Deep learning is booming in NLP: should computational linguists panic?

(Original headline: Deep learning is very successful in the NLP field. Shouldn't computational linguists be alarmed?)

The deep learning wave

For several years now, waves of deep learning have been lapping at computational linguistics, and 2015 seems to be the year when the full force of the wave hit the major NLP conferences. Some pundits, however, predict that the eventual damage will be even worse. Alongside ICML 2015 in Lille, France, there was another, almost equally large event: the 2015 Deep Learning Workshop. The workshop ended with a panel discussion, at which Neil Lawrence said: "NLP is kind of like a rabbit in the headlights of the deep learning machine, waiting to be flattened." That is a remark the computational linguistics community has to take seriously! Is this the end of the road for us? Where are these predictions of being steamrollered coming from?

At the opening of an artificial intelligence research lab in Paris in June 2015, Yann LeCun said: "The next big step for deep learning is natural language understanding: the ability to understand not just individual words, but entire sentences and paragraphs."

In a November 2014 Reddit AMA (Ask Me Anything), Geoff Hinton said: "I think that the most exciting areas over the next five years will be really understanding text and videos. I will be disappointed if in five years' time we do not have something that can watch a YouTube video and tell what happened. In a few years' time, we will put deep learning on a chip that fits into someone's ear and have an English-decoding chip that's just like a real Babel fish." (In The Hitchhiker's Guide to the Galaxy, putting a Babel fish in your ear lets you instantly understand anything said to you in any language.)

Yoshua Bengio, another leading figure of modern deep learning, has also increasingly oriented his group's research toward language, including exciting recent work on neural machine translation systems.

Pictured, from left to right: Russ Salakhutdinov (Assistant Professor, Machine Learning Department, Carnegie Mellon University), Rich Sutton (Professor of Computer Science, University of Alberta), Geoff Hinton (cognitive psychologist and computer scientist at Google), Yoshua Bengio (computer scientist known for his work on artificial neural networks and deep learning), and Steve Jurvetson, moderator of a 2016 panel on machine intelligence in Toronto.

It is not just deep learning researchers who think so. When the leading machine learning researcher Michael Jordan was asked in a September 2014 AMA, "If you got a billion dollars to spend on a huge research project, what would you like to do?", he answered: "I'd use the billion dollars to build a NASA-size program focusing on natural language processing, in all of its glory (semantics, pragmatics, etc.)." He went on: "Intellectually I think that NLP is fascinating, allowing us to focus on highly structured inference problems, on issues that go to the core of what thought is, while remaining eminently practical, and on a technology that surely would make the world a better place." Well, that sounds very nice. So should computational linguistics researchers be afraid? I'd argue: no! To return to the Hitchhiker's Guide to the Galaxy that Geoff Hinton's Babel fish brought up, we need only look at the cover of the Guide, which says in large, friendly letters: "Don't Panic."

The success of deep learning

Deep learning has undoubtedly ushered in amazing technological advances over the past few years. I won't give an exhaustive rundown, just one example. A recent Google blog post introduced Neon, the new transcription system for Google Voice. After admitting that the old Google Voice voicemail transcriptions were often not good enough, the post described the development of Neon, a voicemail system that delivers more accurate transcriptions: "Using a (deep breath) long short-term memory deep recurrent neural network (whew!), we cut our transcription errors by 49%." Don't we all dream of developing a new method that halves the error rate of the previous state-of-the-art system?

Why computational linguists need not worry

In his AMA, Michael Jordan gave two reasons why he was not convinced that deep learning would solve NLP: "Although current deep learning research tends to claim to encompass NLP, I'm (1) much less convinced about the strength of the results, compared to the results in, say, vision; (2) much less convinced in the case of NLP than, say, vision, that the way to go is to couple huge amounts of data with black-box learning architectures." Jordan is certainly right about the first point: so far, higher-level language processing problems have not seen the dramatic error-rate reductions from deep learning that speech recognition and visual object recognition have. There have been gains, but they are more modest than sudden 25% or 50% error reductions, and it could easily turn out that this remains the case. The really dramatic gains may only be possible on true signal processing tasks.

I am less convinced by his second point, however. And I have two reasons of my own why NLP need not worry about deep learning: (1) it can only be wonderful for our field that the smartest and most influential people in machine learning are saying that NLP is the problem area to focus on; and (2) our field is the domain science of language technology. It is not about finding the best machine learning method; the central issue remains the domain problems, and those problems will not go away. As Joseph Reisinger wrote on his blog: "I get pitched regularly by startups doing 'generic machine learning' which is, in all honesty, a pretty ridiculous idea. Machine learning is not undifferentiated heavy lifting, it's not commoditizable like EC2, and closer to design than coding."

From this perspective, it is people in linguistics and people in NLP who are the true designers. Recent ACL meetings have focused too much on numbers and on beating state-of-the-art results; call it playing the Kaggle game. More of the field's effort should go into problems, approaches, and architectures. One thing my collaborators and I have recently been devoting a lot of effort to is the development of Universal Dependencies. Its goal is to develop a common syntactic dependency representation, together with common POS and morphological feature label sets, that can be used across languages. That is just one example; there are other design efforts in the field, such as the idea of Abstract Meaning Representation.
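To make the idea of a shared annotation scheme concrete, here is a minimal sketch that reads one sentence in CoNLL-U, the tab-separated format in which Universal Dependencies treebanks are distributed. The sentence and its annotation are hand-written for illustration rather than taken from a released treebank, and the parser is deliberately simple: it skips comments, multiword-token ranges, and empty nodes.

```python
# CoNLL-U: ten tab-separated columns per syntactic word.
FIELDS = ["id", "form", "lemma", "upos", "xpos", "feats", "head", "deprel", "deps", "misc"]

# Hand-written illustrative annotation (not from an actual UD treebank).
SENTENCE = "\n".join([
    "# text = Linguists design representations.",
    "1\tLinguists\tlinguist\tNOUN\tNNS\tNumber=Plur\t2\tnsubj\t_\t_",
    "2\tdesign\tdesign\tVERB\tVBP\tMood=Ind|Tense=Pres|VerbForm=Fin\t0\troot\t_\t_",
    "3\trepresentations\trepresentation\tNOUN\tNNS\tNumber=Plur\t2\tobj\t_\tSpaceAfter=No",
    "4\t.\t.\tPUNCT\t.\t_\t2\tpunct\t_\t_",
])


def parse_feats(field):
    """Turn 'Key=Val|Key2=Val2' into a dict; '_' means no features."""
    if field == "_":
        return {}
    return dict(pair.split("=", 1) for pair in field.split("|"))


def parse_conllu(text):
    """Return one dict per syntactic word of a single CoNLL-U sentence."""
    tokens = []
    for line in text.splitlines():
        if not line or line.startswith("#"):
            continue  # blank line or sentence-level comment
        cols = line.split("\t")
        if "-" in cols[0] or "." in cols[0]:
            continue  # multiword-token range or empty node: not handled here
        tok = dict(zip(FIELDS, cols))
        tok["id"] = int(tok["id"])
        tok["head"] = int(tok["head"])  # 0 means the word attaches to ROOT
        tok["feats"] = parse_feats(tok["feats"])
        tokens.append(tok)
    return tokens


words = parse_conllu(SENTENCE)
forms = {w["id"]: w["form"] for w in words}
forms[0] = "ROOT"
for w in words:
    print(f"{w['deprel']}({forms[w['head']]}, {w['form']})")
```

Because the POS tags (`upos`), features, and relation labels come from one shared inventory, the same few lines would read a treebank in any language that follows the scheme.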

Deep learning of language

Where has deep learning helped NLP? So far, the gains have come less from true deep learning (using a hierarchy of more abstract representations to promote generalization) than from distributed word representations, that is, real-valued vector representations of words and concepts. Having a dense, multi-dimensional representation of the similarity between all words is extremely useful in NLP, and not only in NLP. Indeed, the importance of distributed representations evokes the "Parallel Distributed Processing" mantra of the earlier wave of neural network methods, which had a much more cognitive-science-oriented focus (Rumelhart and McClelland 1986). Distributed representations can better explain human-like generalization, and, from an engineering perspective, using small-dimensional, dense word vectors lets us model large contexts, which has greatly improved language models. Seen from this perspective, the exponentially growing sparsity that comes from increasing the order of traditional word n-gram models seems conceptually bankrupt.
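The contrast between localist and distributed representations, and the sparsity argument against higher-order n-grams, can be made concrete in a few lines. This is a minimal sketch under stated assumptions: the three-dimensional "dense" vectors are invented numbers rather than learned embeddings, and the vocabulary size of 10,000 is arbitrary.

```python
import math


def cosine(u, v):
    """Cosine similarity: dot(u, v) / (|u| * |v|)."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


VOCAB = ["hotel", "motel", "banana"]

# Localist one-hot vectors: one dimension per word, so distinct words never overlap.
ONE_HOT = {w: [1.0 if i == j else 0.0 for j in range(len(VOCAB))] for i, w in enumerate(VOCAB)}

# Hypothetical dense vectors (invented numbers, not trained embeddings).
DENSE = {
    "hotel": [0.8, 0.1, 0.3],
    "motel": [0.7, 0.2, 0.3],
    "banana": [-0.1, 0.9, -0.4],
}


def ngram_contexts(vocab_size, n):
    """Number of distinct word sequences of length n: |V| ** n."""
    return vocab_size ** n


print("one-hot hotel~motel: ", cosine(ONE_HOT["hotel"], ONE_HOT["motel"]))
print("dense   hotel~motel: ", round(cosine(DENSE["hotel"], DENSE["motel"]), 3))
print("dense   hotel~banana:", round(cosine(DENSE["hotel"], DENSE["banana"]), 3))
for n in (2, 3, 4):
    print(f"possible {n}-grams with |V| = 10,000:", ngram_contexts(10_000, n))
```

With one-hot vectors, hotel and motel are exactly as unrelated as hotel and banana, while dense vectors can encode graded similarity. Each increase in n multiplies the number of possible contexts by the vocabulary size, which is the exponential sparsity that count-based n-gram models run into.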

I do believe that deep models can be useful. The sharing that occurs within deep representations can, in theory, give an exponential representational advantage, and in practice it yields better learning systems. The general approach to building deep learning systems is compelling and powerful: the researcher defines a model architecture and a top-level loss function, and then, in an end-to-end learning framework, both the parameters and the representations of the model self-organize so as to minimize that loss. We are starting to see the power of such deep systems in recent work on neural machine translation (Sutskever, Vinyals, and Le 2014; Luong et al. 2015).
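The recipe in this paragraph (choose an architecture, choose a top-level loss, then let the parameters and the hidden representation adjust to minimize it) can be sketched end to end in plain Python. This is a toy under stated assumptions: the task is XOR, the architecture is one hidden layer of three tanh units with a sigmoid output, the loss is mean cross-entropy, and, for brevity, gradients are estimated by central finite differences instead of the backpropagation that real systems use.

```python
import math
import random

# Toy task (an assumption for illustration): XOR, which no linear model can fit,
# so the hidden layer has to learn an intermediate representation.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
HIDDEN = 3  # number of hidden units (architecture choice)
N_PARAMS = HIDDEN * 3 + HIDDEN + 1  # hidden weights and biases, then output weights and bias


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Architecture: 2 inputs -> HIDDEN tanh units -> 1 sigmoid output."""
    hidden = []
    for k in range(HIDDEN):
        w0, w1, b = params[3 * k:3 * k + 3]
        hidden.append(math.tanh(w0 * x[0] + w1 * x[1] + b))
    out = params[3 * HIDDEN:]  # HIDDEN output weights followed by one bias
    return sigmoid(sum(w * h for w, h in zip(out, hidden)) + out[-1])


def loss(params):
    """Top-level loss: mean binary cross-entropy over the data set."""
    eps = 1e-12
    total = 0.0
    for x, y in DATA:
        p = forward(params, x)
        total -= y * math.log(p + eps) + (1.0 - y) * math.log(1.0 - p + eps)
    return total / len(DATA)


def numeric_grad(f, params, h=1e-5):
    """Central finite-difference gradient (a stand-in for backpropagation)."""
    grad = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += h
        down[i] -= h
        grad.append((f(up) - f(down)) / (2.0 * h))
    return grad


def train(steps=1000, lr=0.5, seed=0):
    """Gradient descent: every parameter moves to reduce the same loss."""
    rng = random.Random(seed)
    params = [rng.uniform(-1.0, 1.0) for _ in range(N_PARAMS)]
    start = loss(params)
    for _ in range(steps):
        grad = numeric_grad(loss, params)
        params = [p - lr * g for p, g in zip(params, grad)]
    return params, start, loss(params)


params, before, after = train()
print(f"loss before training: {before:.3f}, after: {after:.3f}")
```

Nothing in the code specifies what the hidden units should represent; that is shaped only by the loss. Replacing the finite differences with backpropagation or an automatic differentiation library would change how gradients are computed, not the overall recipe.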

Finally, I have long advocated paying more attention to compositionality in models, for language in particular and for artificial intelligence in general. Intelligence requires being able to understand bigger things from knowing about their smaller parts. For language in particular, understanding novel and complex sentences depends crucially on being able to construct their meaning compositionally from smaller parts (words and phrases).
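One way to make compositionality concrete in a neural model is a composition function that maps the vectors of two children to a vector for their parent phrase, applied bottom-up over a binary tree. The sketch below assumes the form p = tanh(W[a; b] + bias), in the style of recursive neural networks; the 2-dimensional word vectors, the matrix `W`, and `BIAS` are invented numbers, whereas a real system would learn them.

```python
import math

# Hypothetical 2-d word vectors (invented for illustration, not learned).
WORDS = {
    "very": [0.5, -0.2],
    "good": [0.9, 0.4],
    "movie": [0.1, 0.8],
}

# Hypothetical composition parameters: W is 2 x 4 and acts on [left; right].
W = [[0.6, 0.1, 0.5, 0.0],
     [0.0, 0.4, 0.2, 0.7]]
BIAS = [0.0, 0.1]


def compose(left, right):
    """Parent vector p = tanh(W [left; right] + BIAS)."""
    children = left + right  # list concatenation stacks the two child vectors
    return [math.tanh(sum(w * c for w, c in zip(row, children)) + b)
            for row, b in zip(W, BIAS)]


def meaning(tree):
    """Bottom-up meaning of a binary tree whose leaves are words."""
    if isinstance(tree, str):
        return WORDS[tree]
    left, right = tree
    return compose(meaning(left), meaning(right))


print(meaning((("very", "good"), "movie")))
```

Because the left and right children are multiplied by different halves of `W`, "good movie" and "movie good" receive different vectors, and the same function applies to phrases of any size, so a novel combination still gets a meaning built from its parts.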

Recently, many papers have shown how systems can be improved by using distributed word representations from "deep learning" approaches, such as word2vec (Mikolov et al. 2013) or GloVe (Pennington, Socher, and Manning 2014). However, this is not actually building deep learning models. I hope that in the future more people will focus on the strongly linguistic question of whether we can build meaning composition functions into deep learning systems.

Scientific questions that connect computational linguistics and deep learning

I encourage people not to get stuck doing nothing more than using word vectors to push performance up. Instead, I suggest that we return to some of the interesting linguistic and cognitive issues that motivated non-categorical representations and neural network methods.

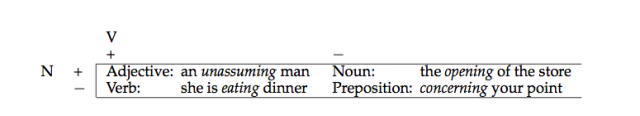

One example of non-categorical phenomena in natural language is the part of speech (POS) of words in the V-ing form (e.g., driving). This form is classically described as ambiguous between a verb form and a nominal gerund. In fact, the real situation is more complicated, because V-ing forms can appear in any of the four core categories of Chomsky (1970).

More interestingly, there is evidence that this is not just an ambiguity but a mixed noun-verb status. For example, a classic linguistic test for being a noun is appearing with a determiner, while a classic test for being a verb is taking a direct object. However, it is well known that gerund nominalizations can do both at the same time:

1. The not observing this rule is that which the world has blamed in our satorist. (Dryden, Essay Dramatick Poesy, 1684, page 310)

2. The only mental provision she was making for the evening of life, was the collecting and transcribing all the riddles of every sort that she could meet with. (Jane Austen, Emma, 1816)

3. The difficulty is in the getting the gold into Erewhon. (Sam Butler, Erewhon Revisited, 1902)

This is usually analyzed as some sort of category-changing operation between levels of a phrase structure tree, but there is evidence that it is actually a case of non-categorical behavior in language.

Indeed, Ross (1972) used this construction early on as an example of a "squish". Diachronically, the V-ing form shows a history of verbalization, but at any given period its status is far from discrete. We find clearly graded grammaticality judgments in this area:

4. Tom's winning the election was a big upset.

5. This teasing John all the time has got to stop.

6. There is no marking exams on Fridays.

7. The cessation hostilities was unexpected.

Many combinations of a determiner with a verbal object do not sound very good, but they are still much better than trying to give a direct object to a nominalization formed with a derivational morpheme such as -ation. Houston (1985, page 320) shows that assigning V-ing forms to discrete part-of-speech classes explains the alternation between -ing and -in' much less well (in a predictive sense) than a continuous interpretation does. Houston also argues that "the grammatical categories exist in a continuum and they have no clear boundaries between categories."

A different and interesting case was studied by one of my fellow graduate students, Whitney Tabor. Tabor (1994) looked at the use of kind of and sort of, an example I later used in the introductory chapter of my 1999 textbook (Manning and Schütze 1999). The nouns kind and sort can head a noun phrase or be used as a hedging adverbial modifier:

8. [That kind [of knife]] isn't used much.

9. We are [kind of] hungry

Interestingly, there are ambiguous forms that provide a reanalysis path, such as the following corpus examples, which show how one form could emerge from the other:

10. [a [kind [of dense rock]]]

11. [a [[kind of] dense] rock]

Tabor (1994) discusses how Old English had kind but few or no uses of kind of. Beginning in Middle English, ambiguous contexts that provided fertile ground for reanalysis began to appear (example (13) is from 1570), and later, unambiguous uses as a hedging modifier appeared (example (14) is from 1830):

12. A nette sent in to the see, and of alle kind of fishis gedrynge (Wyclif, 1382)

13. Their finest and best, is a kind of course red cloth (True Report, 1570)

14. I was kind of provoked at the way you came up (Mass. Spy, 1830)

And this is not just history.

Readers may have noticed the example I quoted in the first paragraph:

15. NLP is kind of like a rabbit in the headlights of the deep learning machine (Neil Lawrence, DL workshop panel, 2015)

Whitney Tabor modeled this evolution with a small but already deep recurrent neural network, one with two hidden layers. He did this work in 1994, taking the opportunity to work with Dave Rumelhart at Stanford.

Only recently has new research started to harness the power of distributed representations for modeling and explaining linguistic variation and change. Sagi, Kaufmann, and Clark (2011), actually using a more traditional method, Latent Semantic Analysis, to generate distributed word representations, show how distributed representations can capture one kind of semantic change: the broadening and narrowing of reference over time. For example, in Old English deer referred to any animal, whereas in Middle and Modern English it refers to one particular family of animals. The meanings of dog and hound have swapped: in Middle English, hound was used for any kind of canine, whereas now it is used for a particular subclass, and the reverse is true for dog.

Figure 1: The cosine similarity between cell and four other words varies over time (where 1.0 denotes the maximum similarity and 0.0 denotes no similarity).

Kulkarni et al. (2015) used neural word embeddings to model shifts in word meaning, such as the shift in the meaning of gay over the past century (using the Google Books Ngram corpus). At a recent ACL workshop, Kim et al. (2014) used a similar approach, based on word2vec, to look at recent changes in word meaning. For example, Figure 1 shows how, around 2000, the meaning of cell changed rapidly from being close to closet and dungeon to being close to phone and cordless. The meaning of a word in this approach is an average over all of the word's senses, weighted by their frequency of use.
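The last sentence, that a word's vector behaves like a frequency-weighted average of its senses, helps explain why similarity curves like those in Figure 1 can move quickly once usage shifts. The sketch below illustrates only that mechanism: the sense vectors, the neighbor vectors, and the per-period sense counts are all invented, and it is not the method or data of Kim et al. (2014).

```python
import math


def cosine(u, v):
    """Cosine similarity: dot(u, v) / (|u| * |v|)."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def weighted_average(sense_vectors, counts):
    """Frequency-weighted mean of sense vectors: sum over s of (count_s / total) * v_s."""
    total = sum(counts.values())
    dim = len(next(iter(sense_vectors.values())))
    avg = [0.0] * dim
    for sense, vec in sense_vectors.items():
        weight = counts.get(sense, 0) / total
        for i, x in enumerate(vec):
            avg[i] += weight * x
    return avg


# Hypothetical 3-d vectors for two senses of "cell" and two neighbor words.
SENSES = {"prison": [0.9, 0.1, 0.0], "phone": [0.0, 0.2, 0.95]}
NEIGHBORS = {"dungeon": [0.85, 0.2, 0.05], "cordless": [0.05, 0.15, 0.9]}

# Hypothetical sense counts for two periods (invented, not corpus data).
PERIODS = {
    "earlier": {"prison": 90, "phone": 10},
    "later": {"prison": 30, "phone": 70},
}


def similarities(counts):
    """Cosine similarity of the averaged 'cell' vector to each neighbor word."""
    cell = weighted_average(SENSES, counts)
    return {word: round(cosine(cell, vec), 3) for word, vec in NEIGHBORS.items()}


for period, counts in PERIODS.items():
    print(period, similarities(counts))
```

No sense vector changes between the two periods; only the mixing weights do, yet the averaged vector for cell moves from the dungeon side of the space toward the cordless side.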

These more scientific uses of distributed representations and deep learning to model linguistic phenomena were characteristic of the earlier wave of neural network research. Amid the recent online squabbles over citing and crediting deep learning research, it seems to me that two people now scarcely get mentioned: Dave Rumelhart and Jay McClelland. Starting from the Parallel Distributed Processing Research Group in San Diego, their research program aimed to study neural networks from a more scientific and cognitive perspective.

Are neural networks adequate for rule-governed linguistic behavior? Researchers have indeed raised some good questions about this. Old-timers will remember that the debate over this issue many years ago made Steve Pinker famous and launched the careers of six of his graduate students. There is not enough space to go into it here, but in the end I think the debate was productive. It led Paul Smolensky to do a great deal of research on how basic categorical systems can emerge and be represented in a neural substrate (Smolensky and Legendre 2006). Indeed, one could argue that Paul Smolensky went too far down the rabbit hole, devoting much of his energy to a new categorical model of phonology, Optimality Theory (Prince and Smolensky 2004). Much earlier scientific work has been neglected, and it would be good for natural language processing to return some emphasis to the cognitive and scientific investigation of language, rather than almost exclusively following an engineering model of research.

All in all, I think we should be excited and glad to live in an era in which natural language processing is seen as central to both machine learning and industrial applications. Our future is bright, but everyone should think more about problems, architectures, cognitive science, and the details of human language: how language is learned, how it is processed, and how it changes, rather than chasing state-of-the-art numbers on benchmark tests again and again.
