Jensen Huang's Six Takeaways on Artificial Intelligence: How GPUs Catalyze AI Computing | GTC China 2016

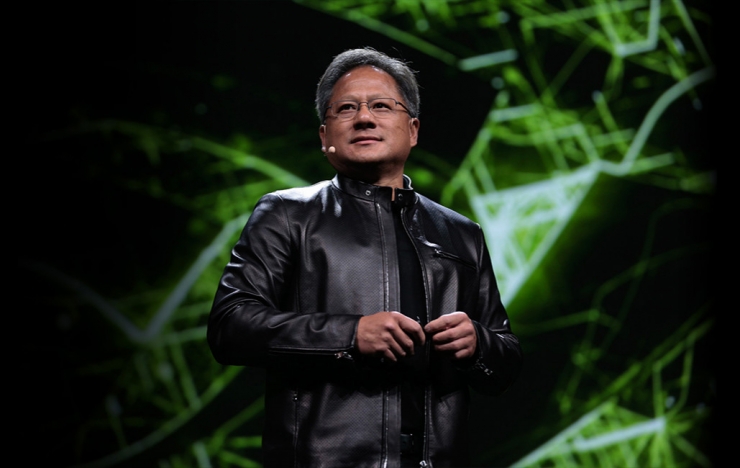

Editor's note from Lei Feng Network: On September 13, 2016, NVIDIA held its GPU Technology Conference (GTC) in Beijing, the first time GTC has been held in China. At the conference, NVIDIA released the Tesla P4 and P40 deep learning chips. Jensen Huang (Huang Renxun) also shared his views on GPUs and the future of computing with tens of thousands of AI and game industry developers in Beijing. The following was compiled from Jensen Huang's speech at GTC China 2016.

I. Four years ago, AlexNet set off the first explosion of deep learning

In 2012, a young researcher named Alex Krizhevsky, working in the University of Toronto's AI lab, designed software that could learn visual recognition on its own. By then deep learning had already been in development for some time, perhaps 20 years.

The network Alex designed has multiple layers, including convolutional layers, activation layers, and input and output layers, and it can tell categories apart. Such a neural network can learn to recognize images or patterns. Deep neural networks turned out to be effective beyond what anyone imagined, but they demanded more computing resources than the computers of the time could provide: training a network to truly recognize images took months.

Alex's insight was that a new type of processor, the GPU, together with a computing model called CUDA, could be applied to parallel computing for this very intensive training. In 2012 he designed a network called AlexNet and submitted it to a large-scale visual recognition competition, a global contest, and won.

AlexNet beat every algorithm developed by other computer vision experts. Alex used only two NVIDIA GTX 580s. After a few days of training on the data, AlexNet's results and quality attracted the attention of every AI scientist working on computer vision. In 2012, Alex Krizhevsky laid the foundation for deep learning, the big bang of modern AI. His work and achievements had great repercussions throughout the world.

I believe that moment will be remembered, because it really did change the world. Afterwards, a great deal of research began to focus on deep learning. In 2012, Professor Andrew Ng (Wu Enda) of Stanford University worked with us to develop a very large-scale GPU configuration for deep learning training. Over the following three years, new networks kept appearing, each beating the other solutions and setting a better record.

II. Speech and visual input lay the foundation for building the AI world

By 2015, both Google and Microsoft had reached human-level visual recognition: software that writes itself, trained on GPUs, can achieve visual recognition better than a human's. In 2015, Baidu also announced that its speech recognition had surpassed human level, which is a very important event. It is the first time that computers have written their own programs and reached a level beyond humans.

Vision and speech are two very important sensory inputs and the basis of human intelligence. Now that these basic pillars are in place, we can push AI forward in ways that were unimaginable before. If sound and visual input were unreliable, how could a machine learn and behave like a human? We believe this foundation now exists, which is why we believe this is the beginning of the AI era.

Researchers all over the world have seen these results, and AI labs everywhere are now using GPUs to run deep learning so that they can begin to build the foundation of future AI. Essentially all AI researchers have started using our GPUs.

At its core, the GPU simulates the physical world. We use GPUs to create virtual worlds for games, design, and storytelling such as filmmaking. Simulating an environment, simulating physical properties, simulating the world around you, and building a virtual world all resemble the computation the human brain performs when it imagines. With the development of deep learning, our work has entered a new stage: artificial intelligence. Simulating human intelligence is one of the most important things we have done, and we are very excited about it.

III. GPU computing reaches into every area of deep learning

Today is also the first time we have held a GTC in China, and a large part of it is about artificial intelligence and deep learning. We are a computing company: the SDK is our most important product, and GTC is our most important event. Look at the growth of the past few years; it is a very impressive rate.

This year GTC had 16,000 participants. The number of developers who have downloaded our SDK tripled, reaching 400,000. But the most striking figure concerns deep learning: downloads of our deep neural network engine have grown 25-fold in two years, to 55,000.

What do they use it for? Many are AI researchers from all over the world; all labs now use our GPU platform for their AI research. There are also software companies, internet service providers, internet companies, car companies, governments, and companies in medical imaging, finance, manufacturing, and other fields. The range of fields using GPUs for deep learning is now remarkably broad.

IV. The brain works like GPU computing

Everyone asks why AI researchers chose GPUs. Alex found that the parallel computing of GPUs matches the computational characteristics of deep learning networks very well. But why, more fundamentally, is the GPU such a suitable tool for deep learning? Let me give you a not-so-serious example to explain why the GPU matters.

The brain is like a GPU. Suppose I ask everyone to imagine table tennis: close your eyes, and your brain forms a picture of people playing table tennis. If you imagine Kung Fu Panda, an image of Kung Fu Panda appears in your mind. So our brain generates pictures as we think.

Conversely, the GPU's architecture is like a brain. It is not a single processor executing operations in sequence. Our GPU has thousands of very small processors that work together on a problem: they perform mathematical calculations, connect to one another, share information, and ultimately solve one big problem, much as our brain does. So the brain is like a GPU because it can produce images, and the GPU is like a brain in how it computes. That is why this new computing model, which can even take on problems such as virtual reality, is so well suited to the GPU.
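The idea of many small processors each handling a piece of one large problem can be sketched on a CPU. The example below is only an analogy, not GPU code: the function names `partial_dot` and `parallel_dot` and the worker count are hypothetical, and in CPython the threads illustrate the decomposition pattern rather than deliver a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_dot(chunk):
    """One 'small processor': multiply-accumulate over its own slice of the data."""
    xs, ws = chunk
    return sum(x * w for x, w in zip(xs, ws))


def parallel_dot(xs, ws, workers=8):
    """Split a dot product into slices, compute them concurrently, then combine."""
    size = max(1, (len(xs) + workers - 1) // workers)  # ceiling division
    chunks = [(xs[i:i + size], ws[i:i + size]) for i in range(0, len(xs), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_dot, chunks))  # independent pieces of work
    return sum(partials)  # final reduction combines the partial results


inputs = [float(i) for i in range(1000)]
weights = [0.5] * 1000
result = parallel_dot(inputs, weights)
print(result)
```

Each worker touches only its own slice and the partial results are combined at the end. This split-then-reduce structure is the same shape of work that the speech describes thousands of GPU processors sharing.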

Deep learning is a new computing model that touches every aspect of software. You first design a network and then train it. Training a network requires billions of operations or more and involves millions of data samples or more, so it takes a long time. Without a GPU the process may take several months; the GPU compresses that to a few days, which is why the GPU helps everyone solve problems better.

V. Finding a computing model faster than Moore's Law

Training is the foundation of deep learning. Once the network has been trained, you use it to make predictions, to infer, and to classify: you run inference on each incoming piece of information. For example, billions of people submit queries online every day, as pictures, text, and speech, and in the future perhaps video. GPU inference can respond very quickly in the data center. So the first part of deep learning is training, and the second part is inference.
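The split between training and inference can be illustrated with a deliberately tiny model. This is a minimal sketch under stated assumptions: a one-variable linear model fitted by gradient descent stands in for a deep network, and the toy data, learning rate, and function names are all hypothetical.

```python
def train(samples, epochs=2000, lr=0.01):
    """Training: repeatedly adjust the parameters to reduce error on known examples."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in samples:
            err = (w * x + b) - y      # prediction error on one example
            grad_w += 2 * err * x / n  # gradient of mean squared error w.r.t. w
            grad_b += 2 * err / n      # gradient of mean squared error w.r.t. b
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def infer(params, x):
    """Inference: a single cheap forward pass with the parameters frozen."""
    w, b = params
    return w * x + b


# Hypothetical toy data drawn from y = 2x + 1
data = [(x, 2.0 * x + 1.0) for x in range(-5, 6)]
params = train(data)             # expensive: thousands of passes over the data
prediction = infer(params, 10.0)  # cheap: one multiply and one add
print(params, prediction)
```

Training loops over the whole data set thousands of times, while inference evaluates the finished model once per query. That asymmetry is why the two phases are treated as separate workloads.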

The third part of deep learning is what some people call IoT: smart devices and smart terminals, perhaps cameras, cars, robots, or microphones, so that connected devices become intelligent devices. The Internet of Things needs to be driven by AI, by deep neural networks. The basic job of this huge number of smart terminals is to recognize, classify, and interact, and they must do all of this accurately and at low power.

In the coming years, software development will be different from before, the way software runs will be different, and the computations it performs will be different. Many devices will run different things, so deep learning will affect every aspect of computing.

Now let us look at training. First we should appreciate its complexity. As mentioned earlier, training may take billions or even trillions of operations. The larger the model and the more data there is, the more accurate the result: big models, big data, and a large amount of computation bring better results for deep learning. This is fundamental and very important.

Microsoft has a recognition network called ResNet. Compare it with AlexNet: AlexNet has 8 layers, its total computation is 1.4 billion floating-point operations (1.4 GFLOPs), and its error rate is 16%. What do 8 layers, 1.4 GFLOPs, and a 16% error rate mean? That was the best result at the time. Most algorithms developed by computer vision experts then had an error rate higher than 16%, which shows how limited conventional computer vision methods were in accuracy.

With deep learning, the error rate has come down to 3.5% over the past few years. That 3.5% comes from a 152-layer network tested on millions of images: only 8 layers a few years ago, 152 layers now. The total computation is 22.6 GFLOPs, roughly a 16-fold increase (22.6 / 1.4 ≈ 16), and that shows the challenge of deep learning: within three years its computational load has grown about 16-fold, much faster than Moore's Law.
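The comparison with Moore's Law can be checked with a few lines of arithmetic. The sketch below takes the two compute figures quoted above at face value and assumes, for illustration only, that Moore's Law means a doubling every two years.

```python
alexnet_gflops = 1.4   # compute quoted for AlexNet (8 layers, 2012)
resnet_gflops = 22.6   # compute quoted for ResNet (152 layers)
years = 3              # time span quoted in the speech

compute_growth = resnet_gflops / alexnet_gflops

# Assumption: Moore's Law taken as a doubling every two years.
moore_growth = 2 ** (years / 2)

print(f"model compute growth: {compute_growth:.1f}x")            # 16.1x
print(f"Moore's Law over {years} years: {moore_growth:.1f}x")    # 2.8x
```

Under that assumption the model's compute demand grew several times faster than transistor scaling alone would supply, which is the gap the speech goes on to describe.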

So the problems are becoming more complex and more difficult, but computing power has not grown at a matching pace. That is why the whole industry began looking for a new computing model, and why they all began to consider GPU computing.

Speech recognition is an even more striking case. Speech recognition is the foundation of natural language understanding, which in turn is a basis of intelligence. This is the work of Andrew Ng's (Wu Enda's) lab at Baidu. In 2014 the model had 25 million parameters, was trained on a 7,000-hour corpus, and had an error rate of 8%. In 2015 the training data doubled and the network became four times larger: with 2 times the data and 4 times the network complexity, the error rate reached 5%. Within one year, the error rate of Baidu's Deep Speech fell by about 40%. But at what price? A large increase in computation.
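The "about 40%" figure is a relative reduction, which can be worked out from the two quoted error rates. The training-cost estimate at the end rests on a loudly labeled assumption, namely that cost scales with data size times model size; the speech itself gives no such formula.

```python
error_2014 = 0.08  # error rate quoted for 2014
error_2015 = 0.05  # error rate quoted for 2015

# Relative reduction: how much of the earlier error was eliminated.
relative_reduction = (error_2014 - error_2015) / error_2014
print(f"relative error reduction: {relative_reduction:.1%}")  # 37.5%

data_scale = 2   # 2x the training data
model_scale = 4  # 4x the network size

# Assumption (not from the speech): training cost ~ data size x model size.
compute_scale = data_scale * model_scale
print(f"rough training compute increase: {compute_scale}x")  # 8x
```

The error rate fell by three percentage points, or 37.5% in relative terms, which rounds to the quoted figure. Under the stated proportionality assumption that gain cost roughly eight times the training computation.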

Deep learning took so long to become practical because the computing conditions for it did not exist. No computer could train such networks until GPUs were applied to deep learning. That is why we are so excited about this new computing model, and why this moment matters so much to the computing industry.

This trend will also continue. Remember that we are still at a 5% error rate, and we want 0%. We want everyone's voice to be recognized and, better still, the meaning of the words to be understood, so the demand for computing will grow much further.

VI. AI will change the computing system from top to bottom

Pascal is our GPU architecture optimized for deep learning. The Pascal processor is a real marvel. It is a brand-new architecture: it is built from three-dimensional transistors and uses 3D packaging and 3D stacking, all of which gives Pascal a huge performance boost. New instructions, new manufacturing methods, new packaging methods, and a new interconnect link multiple GPUs so that they can work as a team. We invested three years and the work of 10,000 people in it, the largest undertaking in our history.

We also recognize that the processor is only the beginning. With a new computing model like AI, the computing system architecture will change, processor design will change, algorithms will change, the way we develop software will change, and system design will change too.

We have a new supercomputer the size of a box, called DGX-1. It replaces about 250 servers: an entire data center shrunk to the size of a small box. This supercomputer was designed from scratch. Taking our processor advances together with DGX-1, we have achieved a 65-fold increase in performance over the course of a year. That is a 65-fold increase compared with when Alex first used our GPUs to train his network. It is much faster than Moore's Law, much faster than the development of the entire semiconductor industry, and much faster than any other progress in computing.
