With Japanese technology, robots can recognize human faces even in the dark

In today's robotics world, vision-based face recognition and detection has developed very rapidly and is now used in many fields.

Face recognition is now applied in payment, remote business processing, security, and access control. After nearly two months of trial operation, a face recognition security system was recently put into use in the domestic security inspection area of Shenzhen Bao'an International Airport. It is also the first security inspection information system to embed face recognition at an airport. Many people say we are entering the “face-swiping” era: at ticket checks, entry gates, shopping checkouts, and many similar scenarios, you will get by simply by “swiping” your face.

For the time being, however, robots succeed at facial recognition only because they operate under normal lighting conditions. To develop the technology further, one obstacle must be overcome: how can a face be recognized reliably when the lighting is uncertain? Before we reach the face-swiping era, we therefore need an algorithm that lets robots perform facial recognition under abnormal lighting conditions.

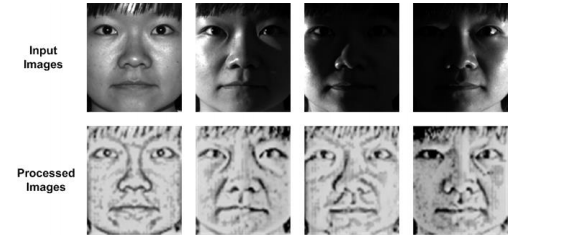

Now, researchers in the Department of Computer Science and Engineering at Toyohashi University of Technology in Japan have developed a technology that compensates for the effects of light on human faces by applying a reflection model. The model has a variable lighting ratio that is controlled by an FIS (Fuzzy Inference System). The system also uses a GA (Genetic Algorithm), which enables the robot to perform face recognition under different lighting conditions.
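To make the idea of a fuzzy-controlled lighting ratio more concrete, here is a minimal sketch in Python. It is not the researchers' reflection model: the membership functions, the rule values, and the names `triangular`, `RULES`, `lighting_ratio`, and `adjust_lighting` are all assumptions made for illustration. It also applies a single ratio to the whole image and leaves out the genetic algorithm entirely.

```python
def triangular(x, left, peak, right):
    """Triangular fuzzy membership of x, in [0, 1]."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


# Hypothetical rule base (not from the paper): each rule pairs a fuzzy set
# over mean brightness (0-255) with the lighting ratio it recommends.
RULES = [
    ((-1, 0, 128), 1.8),     # "dark"   -> brighten
    ((0, 128, 255), 1.0),    # "normal" -> leave unchanged
    ((128, 255, 256), 0.6),  # "bright" -> dim
]


def lighting_ratio(mean_brightness):
    """Blend the rule outputs, weighted by how strongly each rule fires."""
    weights = [triangular(mean_brightness, *params) for params, _ in RULES]
    total = sum(weights)
    if total == 0:
        return 1.0  # no rule fires: leave the image unchanged
    blended = sum(w * ratio for w, (_, ratio) in zip(weights, RULES))
    return blended / total


def adjust_lighting(image):
    """Scale a grayscale image (rows of 0-255 ints) by the fuzzy ratio."""
    pixels = [p for row in image for p in row]
    ratio = lighting_ratio(sum(pixels) / len(pixels))
    return [[min(255, max(0, round(p * ratio))) for p in row] for row in image]
```

With these assumed rules, a uniformly dim patch with mean brightness 64 is half "dark" and half "normal", so it receives a ratio of about 1.4, while a patch at 128 is left unchanged. In a fuller system, a genetic algorithm could search over the rule parameters that are hard-coded here.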

Research team leader Bima Sena Bayu Dewantara said:

To reduce the influence of light on face recognition, we should adjust the image contrast appropriately. For example, to produce a stable facial image, we need to brighten the cheeks and dim the light around the eyes. This can be achieved with the reflection model we use. Combining FIS and GA is also very important for operating this model.

Professor Jun Miura, who also leads the research, said:

By adjusting the image contrast, we can improve the accuracy and effectiveness of facial recognition and detection, and the method can also be used in real time for robot face recognition and human-computer interaction.

A human face not only distinguishes one person from another but also conveys other information: whether the person is tired, happy or sad, calm or nervous. Capturing this information is crucial for human-computer interaction. The researchers said that by adjusting the image contrast, the robot can perform facial recognition and detection under different conditions, especially when lighting is insufficient. On July 15th, the team published its results in Machine Vision and Applications.

In fact, Toyohashi University of Technology has already made some achievements in human-computer interaction. Last year, the university’s Interaction and Communication Design Laboratory developed a robot called “Talking Ally” that can observe the user’s behavior and respond accordingly.

It resembles the hopping desk lamp in Pixar's well-known animated short film "Luxo Jr." It has an elliptical head and an elastic body supported by a spring, and it can rotate its body flexibly. Its single eye contains a camera that stays focused on the user, which lets Talking Ally recognize the user's face and expression. Talking Ally was designed mainly to keep the user focused: by observing where the user's gaze falls, the robot determines where the user's attention is and interacts accordingly.

With the rapid development of human-computer interaction, the face-swiping era no longer seems out of reach. But to get there, one difficulty still has to be overcome.