Which one is better, Keras or TensorFlow?

Deep learning is developing rapidly, but the many deep learning frameworks that have emerged over the past two years have left beginners at a loss: Google's TensorFlow, Amazon's MXNet, Facebook's PyTorch, Theano, Caffe, CNTK, Chainer, Baidu's PaddlePaddle, DSSTNE, DyNet, BigDL, Neon, and more.

Among them, TensorFlow, the best-known framework for production deep learning, has very strong ecosystem support. Compared with other frameworks, however, TensorFlow also has drawbacks, such as slower speed and being difficult to use. Keras, built on top of TensorFlow, provides a simple, easy-to-use API that is well suited to beginners.

In January 2017, Keras author and Google AI researcher Francois Chollet announced that Keras would become the first high-level library added to the core of TensorFlow, and Keras has since become TensorFlow's default high-level API.

"So, should I use Keras or TensorFlow in my project? Which one is better, Keras or TensorFlow? Should I spend my time studying TensorFlow or Keras?"

Adrian Rosebrock, author of the book "Deep Learning for Computer Vision with Python", has heard this confusion in conversations with deep learning researchers, practitioners, and engineers.

On the question of Keras versus TensorFlow, Rosebrock believes developers should focus on the fact that Keras has now been fully adopted and integrated into TensorFlow, which means they can:

Use Keras's easy-to-use interface to define the model.

If you need a specific TensorFlow feature, or need to implement custom functionality that Keras does not support but TensorFlow does, drop down into TensorFlow.

His advice is to start with Keras, then drop down into TensorFlow for any specific functionality you may need. In this article, Rosebrock shows how to train a neural network with Keras, and how to train a model with the Keras+TensorFlow integration (including custom TensorFlow functions) built directly into the TensorFlow library.

The full article follows:

▌It is meaningless to compare Keras and TensorFlow

Over the past few years, deep learning researchers, developers, and engineers have often had to make a choice:

Should I choose the Keras library, which is easy to use but difficult to customize?

Or should I use the harder TensorFlow API and write a lot more code (not to mention an API that is not as easy to use)?

If you are stuck on the question "Should I use Keras or TensorFlow?", take a step back and look again. It is actually the wrong question, because you can use both at the same time.

I will implement a convolutional neural network (CNN) twice: once with the standard keras module and once with the tf.keras module built into TensorFlow. After training both CNNs on a sample dataset and examining the results, you will see that Keras and TensorFlow coexist harmoniously.

Although TensorFlow announced more than a year ago that Keras would be incorporated into its official release, I am surprised how many deep learning developers still do not realize they can access Keras through the tf.keras submodule. More importantly, Keras and TensorFlow are seamlessly integrated, so we can write TensorFlow code directly into a Keras model.

Using Keras inside TensorFlow gives you the best of both worlds:

You can create your own models with the simple, native API that Keras provides.

Keras's API is similar to scikit-learn's, and both are widely regarded as high-quality machine learning APIs.

Keras's API is modular, Pythonic, and extremely easy to use.

When you need a custom layer or a more complex loss function, you can drop down into TensorFlow, and the code will automatically work with your Keras model.
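A minimal sketch of that last point, assuming TensorFlow 2.x: a loss function written with plain TensorFlow ops can be passed straight to `compile()` on a tf.keras model. The function name `clipped_categorical_crossentropy`, the tiny model, and the random data are hypothetical and only illustrate the mechanism.

```python
import numpy as np
import tensorflow as tf


def clipped_categorical_crossentropy(y_true, y_pred):
    """Hypothetical custom loss written directly with TensorFlow ops."""
    # make sure labels and predictions share a dtype before multiplying
    y_true = tf.cast(y_true, y_pred.dtype)
    # clip predictions so tf.math.log never sees an exact zero
    y_pred = tf.clip_by_value(y_pred, 1e-7, 1.0)
    # per-sample cross-entropy: -sum(y_true * log(y_pred)) over the class axis
    return -tf.reduce_sum(y_true * tf.math.log(y_pred), axis=-1)


# a small Keras model that uses the TensorFlow loss like any built-in one
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(3, activation="softmax"),
])
model.compile(optimizer="sgd", loss=clipped_categorical_crossentropy)

# one quick epoch on random data, only to show the pieces fit together
X = np.random.rand(8, 4).astype("float32")
y = np.eye(3, dtype="float32")[np.random.randint(0, 3, size=8)]
history = model.fit(X, y, epochs=1, verbose=0)
```

Nothing Keras-specific is needed inside the loss: Keras simply calls the function with the label and prediction tensors.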

▌Keras is built into TensorFlow through the tf.keras module

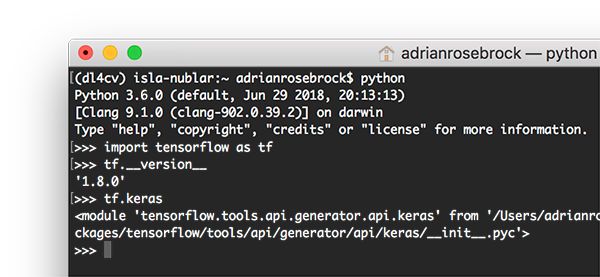

As you can see, by importing TensorFlow (as tf) and accessing tf.keras in a Python shell, we show that Keras is actually part of TensorFlow.

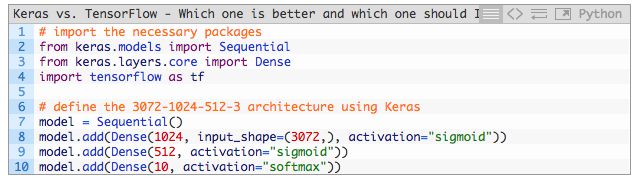

Having Keras inside tf.keras means we can take the following simple feedforward neural network, built with the standard Keras package:

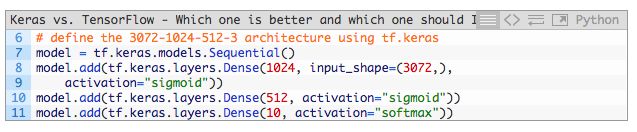

Next, we implement the same network with the tf.keras submodule that is part of TensorFlow:
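A sketch of what "the same network" means in practice, assuming TensorFlow 2.x. The 3072-1024-512-10 layer sizes are illustrative assumptions, and `build_feedforward` is a hypothetical helper: because tf.keras and the standalone keras package expose the same `Sequential`/`Dense` API, one builder function can target either module.

```python
import numpy as np
import tensorflow as tf

try:
    import keras  # the standalone Keras package, if it is installed
except ImportError:
    keras = None


def build_feedforward(kmod):
    """Build an illustrative 3072-1024-512-10 network with the given Keras module."""
    return kmod.models.Sequential([
        kmod.Input(shape=(3072,)),                       # a flattened 32x32x3 image
        kmod.layers.Dense(1024, activation="sigmoid"),
        kmod.layers.Dense(512, activation="sigmoid"),
        kmod.layers.Dense(10, activation="softmax"),     # one output per class
    ])


tf_model = build_feedforward(tf.keras)                      # Keras via TensorFlow
keras_model = build_feedforward(keras) if keras else None  # standalone Keras

# both models accept the same input and produce 10 class probabilities
probs = tf_model.predict(np.zeros((1, 3072), dtype="float32"), verbose=0)
```

Only the module passed in changes; the model definition itself is identical.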

But does this mean you have to use tf.keras and give up the standard Keras package? Of course not!

Keras still exists as a library separate from TensorFlow and can run independently, so the two could still go separate ways in the future. However, since Google officially supports both Keras and TensorFlow, a split seems extremely unlikely.

But the point is:

If you prefer to write pure Keras code, go ahead, and you can keep doing so in the future. But if you are a TensorFlow user, you should start considering the Keras API because:

It is built into TensorFlow

It is easier to use

When you need pure TensorFlow for specific functionality or performance, you can use it directly inside your Keras model.

▌Sample dataset

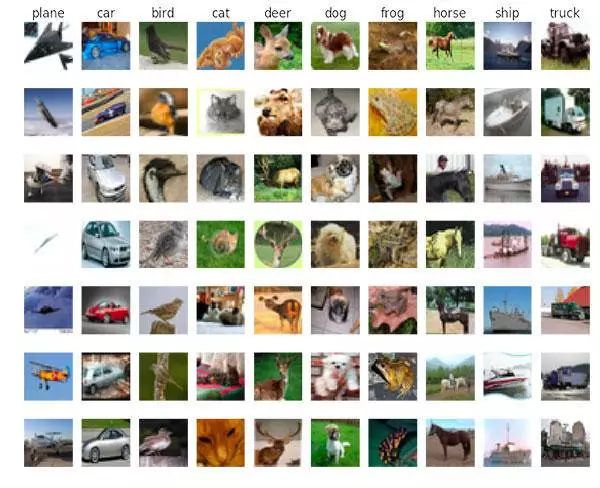

The CIFAR-10 dataset has 10 classes; we use it to demonstrate the ideas in this article.

To keep things simple, we will train two separate convolutional neural networks (CNNs) on the CIFAR-10 dataset, as follows:

Method 1: a Keras model with TensorFlow as the backend

Method 2: the Keras submodule inside TensorFlow, tf.keras

Along the way, I will also show how to include custom TensorFlow code in your Keras model.

The CIFAR-10 dataset includes 10 classes, with 50,000 training images and 10,000 test images.

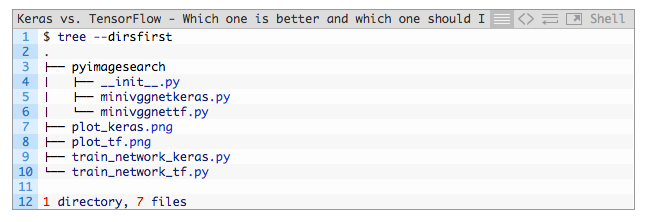

▌Project structure

We can use the tree command in the terminal to view the structure of the project:

The pyimagesearch module is included in the article's "Downloads". It cannot be installed via pip, but it is part of the downloaded code. Let's review the two important Python files in this module:

minivggnetkeras.py: a pure Keras implementation of MiniVGGNet, a deep learning model based on VGGNet.

minivggnettf.py: a TensorFlow + Keras (i.e., tf.keras) implementation of MiniVGGNet.

The root directory of the project contains two Python files:

train_network_keras.py: the training script implemented with Keras;

train_network_tf.py: the training script for the TensorFlow + Keras implementation. It is essentially the same as the former, but we will still walk through it and point out the differences.

Each script generates its own plot of training accuracy and loss:

plot_keras.png

plot_tf.png

Next, I will walk you through the Keras and TensorFlow + Keras (tf.keras) implementations of MiniVGGNet and how each one is trained.

▌Train a neural network with Keras

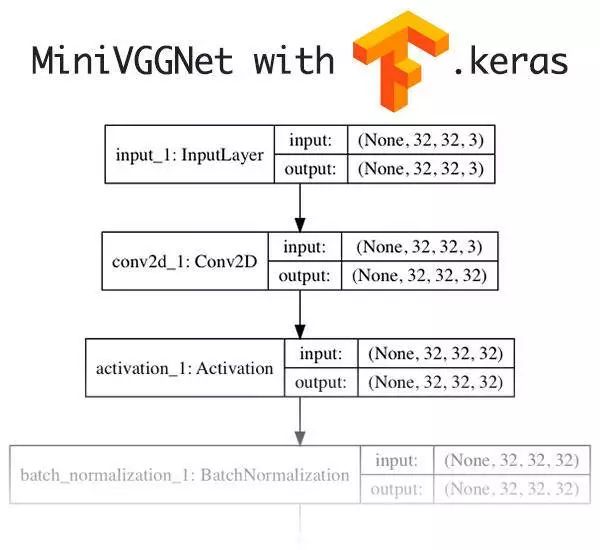

A miniVGGNet convolutional neural network structure implemented with Keras

The first step in training our network is to build the architecture of the network in Keras.

If you are already familiar with the basics of training neural networks in Keras, let's begin (if not, please refer to the related introductory article).

Related link: https://

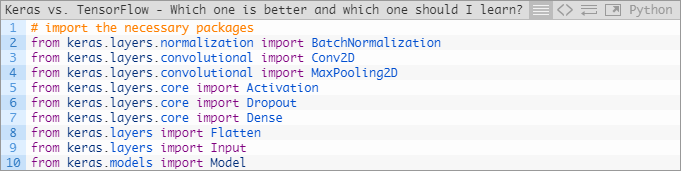

First, open the minivggnetkeras.py file and insert the following code:

We start building the model by importing the required Keras modules.

Then, define a MiniVGGNetKeras class:

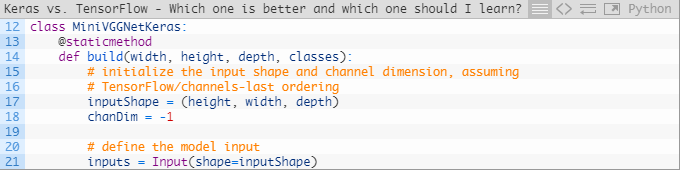

On line 12 we define the build method and initialize inputShape and the model input. We assume "channels last" ordering, so the last value of the inputShape tuple corresponds to the depth.
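For readers who want the channel-ordering logic spelled out, here is a small, framework-free sketch. The helper name `input_shape_and_chan_dim` is hypothetical; it mirrors the common pattern of putting depth last for "channels_last" and first for "channels_first", and also returns the axis that batch normalization should normalize over.

```python
def input_shape_and_chan_dim(width, height, depth, data_format="channels_last"):
    """Return (inputShape, chanDim) for an image model (hypothetical helper).

    In a real Keras model, data_format would typically come from
    K.image_data_format() rather than being passed in by hand.
    """
    if data_format == "channels_last":
        # (rows, cols, channels): depth is the last value; BN normalizes axis -1
        return (height, width, depth), -1
    if data_format == "channels_first":
        # (channels, rows, cols): depth comes first; BN normalizes axis 1
        return (depth, height, width), 1
    raise ValueError(f"unknown data_format: {data_format!r}")


# CIFAR-10 images are 32x32 pixels with 3 color channels
inputShape, chanDim = input_shape_and_chan_dim(32, 32, 3)
```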

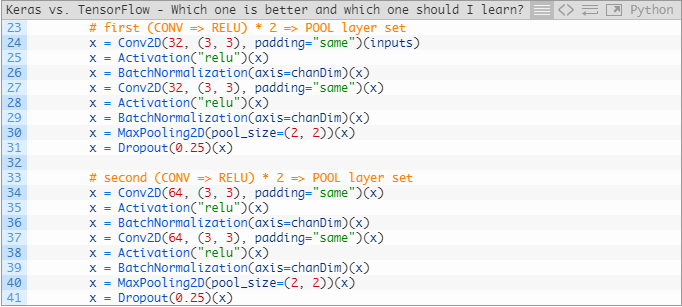

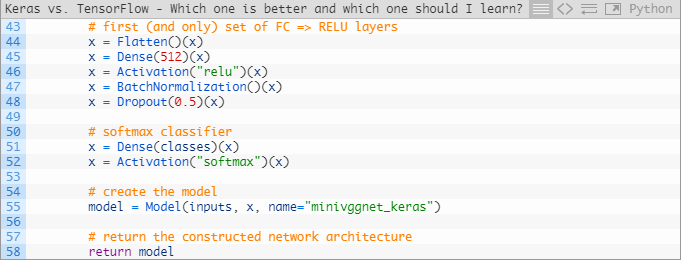

Now let's define the main body of the convolutional neural network:

In this code, we stack a series of convolution (Conv2D), ReLU activation, and batch normalization layers before each pooling layer, which reduces the spatial dimensions of the volume. We also apply Dropout to reduce overfitting.
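To see how pooling shrinks the volume, the short calculation below traces a 32x32 CIFAR-10 image through two conv/pool blocks. It assumes 3x3 convolutions with "same" padding and stride 1 (which leave height and width unchanged), 2x2 max pooling with stride 2, and 32 then 64 filters; these settings are assumptions for illustration.

```python
def pool_output_size(size, pool=2, stride=2):
    """Spatial size after 'valid' max pooling: floor((size - pool) / stride) + 1."""
    return (size - pool) // stride + 1


def trace_feature_shape(size=32, filters_per_block=(32, 64)):
    """Track (height, width, depth) through CONV => RELU => BN (x2) => POOL blocks."""
    depth = None
    for filters in filters_per_block:
        # two 3x3, 'same'-padded, stride-1 convolutions keep height and width
        # and set the depth to the number of filters
        depth = filters
        # the 2x2 pooling layer then halves height and width
        # (dropout does not change the shape at all)
        size = pool_output_size(size)
    return size, size, depth


h, w, d = trace_feature_shape()
flattened = h * w * d  # number of values fed into the fully connected layers
```

Under these assumptions the volume goes from 32x32x3 to 16x16x32 and then 8x8x64, so 4,096 values reach the first fully connected layer.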

For background on these layer types and terms, refer to the previous Keras tutorial:

https://

For more in-depth study, I recommend the book "Deep Learning for Computer Vision with Python":

https://

Next, we add the fully connected (FC) layers to the network:

We add the FC layer and Softmax classifier to the network. Then we define the neural network model and return it to the calling function.
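Putting the pieces together, here is a hedged tf.keras sketch of a MiniVGGNet-style network written with the functional API. The filter counts (32 and 64), the 512-unit FC layer, the dropout rates, and the function name `build_minivggnet` are assumptions for illustration, not a copy of minivggnetkeras.py; with standalone Keras, the same structure should carry over by importing the layers from keras instead of tf.keras.

```python
import numpy as np
import tensorflow as tf

layers = tf.keras.layers


def build_minivggnet(width, height, depth, classes):
    """MiniVGGNet-style CNN (illustrative sketch, channels-last input)."""
    chan_dim = -1  # channels last, so batch normalization uses the last axis
    inputs = tf.keras.Input(shape=(height, width, depth))
    x = inputs
    # two blocks of (CONV => RELU => BN) x 2 => POOL => DROPOUT
    for filters in (32, 64):
        for _ in range(2):
            x = layers.Conv2D(filters, (3, 3), padding="same")(x)
            x = layers.Activation("relu")(x)
            x = layers.BatchNormalization(axis=chan_dim)(x)
        x = layers.MaxPooling2D(pool_size=(2, 2))(x)
        x = layers.Dropout(0.25)(x)
    # FC => RELU => BN => DROPOUT, then the softmax classifier
    x = layers.Flatten()(x)
    x = layers.Dense(512)(x)
    x = layers.Activation("relu")(x)
    x = layers.BatchNormalization()(x)
    x = layers.Dropout(0.5)(x)
    x = layers.Dense(classes)(x)
    x = layers.Activation("softmax")(x)
    # return the model to the calling function
    return tf.keras.Model(inputs, x, name="minivggnet_sketch")


model = build_minivggnet(width=32, height=32, depth=3, classes=10)
probs = model.predict(np.zeros((2, 32, 32, 3), dtype="float32"), verbose=0)
```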

We have now defined the CNN model in Keras. Next, we create the script that trains it.

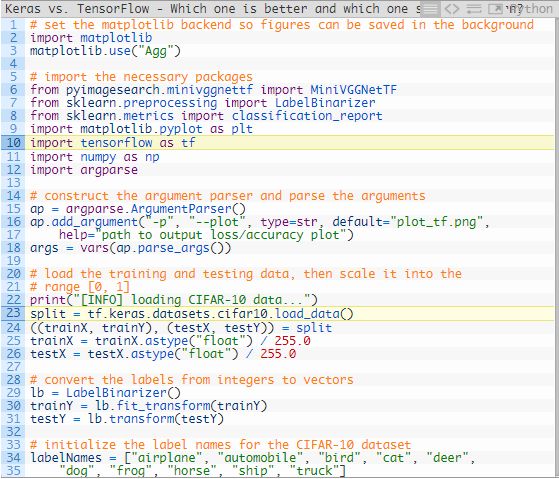

Open the train_network_keras.py file and insert the following code:

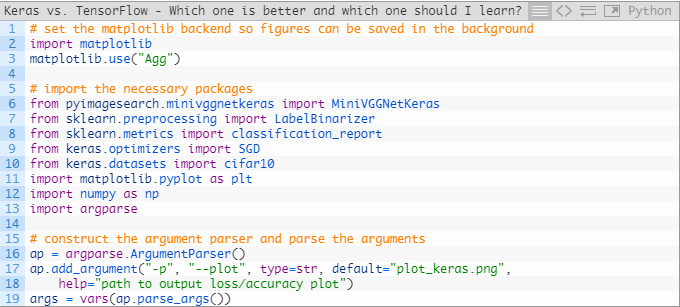

On lines 2-13 we import the packages needed for training.

Note the following:

On line 3, we set the Matplotlib backend to "Agg" so that we can save the training plot to an image file.

In line 6, we import the MiniVGGNetKeras class.

We use scikit-learn's LabelBinarizer for one-hot encoding and its classification_report function to print classification statistics (lines 7 and 8, respectively).

On line 10 we import the CIFAR-10 dataset used for training.

To learn how to use a custom dataset, please refer to:

https://

https://
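A minimal sketch of the one-hot encoding step, assuming scikit-learn is installed: LabelBinarizer learns the set of class IDs and turns each integer label into a 10-element vector. Fitting on `np.arange(10)` is a choice made here so that all ten CIFAR-10 classes get a column even in a tiny example.

```python
import numpy as np
from sklearn.preprocessing import LabelBinarizer

lb = LabelBinarizer()
lb.fit(np.arange(10))                      # learn the ten CIFAR-10 class IDs, 0..9

labels = np.array([6, 9, 4])               # integer class IDs (1-D)
one_hot = lb.transform(labels)             # shape (3, 10), exactly one 1 per row
recovered = lb.inverse_transform(one_hot)  # back to the integer IDs
```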

We also parse a single command line argument, --plot (the path to the output plot), on lines 16-19.
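A sketch of that argument parsing with Python's standard argparse module. The short flag `-p`, the default file name, and the `parse_args` wrapper are assumptions; the article only says that a --plot path is parsed.

```python
import argparse


def parse_args(argv=None):
    """Parse the --plot argument (hypothetical wrapper; argv=None reads sys.argv)."""
    ap = argparse.ArgumentParser()
    ap.add_argument("-p", "--plot", type=str, default="plot_keras.png",
                    help="path to output loss/accuracy plot")
    return vars(ap.parse_args(argv))


args = parse_args(["--plot", "plot_tf.png"])
```

Returning `vars(...)` gives a plain dictionary, so the script can read the path as `args["plot"]`.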

Next, we load the CIFAR-10 dataset and encode the labels:

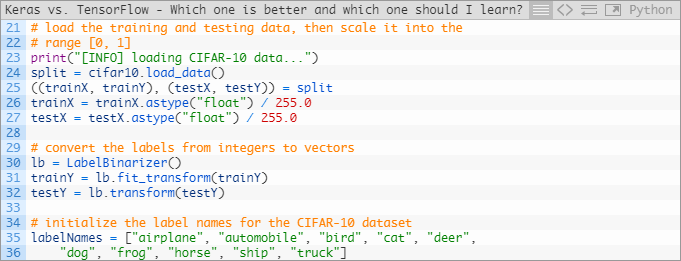

On lines 24 and 25, we load CIFAR-10 and extract the training and testing data, respectively; on lines 26 and 27, we convert the data to floating point and scale it to the range [0, 1].
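The conversion step can be sketched with NumPy alone. In the real scripts the arrays come from `cifar10.load_data()` (`tf.keras.datasets.cifar10.load_data()` in the tf.keras version), which returns `((trainX, trainY), (testX, testY))` with uint8 images of shape (N, 32, 32, 3); the random arrays below merely stand in for them, and float32 is an assumed choice of dtype.

```python
import numpy as np


def scale_images(images):
    """Convert uint8 pixels in [0, 255] to float32 values in [0.0, 1.0]."""
    return images.astype("float32") / 255.0


# stand-ins for CIFAR-10 training and test images (uint8, 32x32x3)
rng = np.random.default_rng(42)
trainX = scale_images(rng.integers(0, 256, size=(4, 32, 32, 3), dtype=np.uint8))
testX = scale_images(rng.integers(0, 256, size=(2, 32, 32, 3), dtype=np.uint8))
```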

On lines 30-36 we one-hot encode the labels and initialize labelNames, the human-readable class names.
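A small sketch of how labelNames is typically used: the ten CIFAR-10 class names, in label-ID order, turn a model's predicted probabilities back into readable labels. The helper `names_from_probabilities` is hypothetical.

```python
import numpy as np

# CIFAR-10 class names, in label-ID order 0..9
labelNames = ["airplane", "automobile", "bird", "cat", "deer",
              "dog", "frog", "horse", "ship", "truck"]


def names_from_probabilities(probabilities):
    """Map each row of class probabilities to its most likely class name."""
    return [labelNames[i] for i in np.argmax(probabilities, axis=1)]


# two fake one-hot "predictions" for classes 3 and 9
example = names_from_probabilities(np.eye(10)[[3, 9]])
```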

With the model defined and the dataset loaded, we can now train the model:

On lines 40-46, we set the training parameters and the optimizer.

Then, on lines 47-50, we initialize our model with the MiniVGGNetKeras.build method and compile it.

Finally, we start training on lines 54 and 55.
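Assuming tf.keras, the optimizer, compile, and fit steps look roughly like this. The hyperparameters (learning rate 0.01, momentum 0.9 with Nesterov, batch size, epoch count) and the helper name `compile_and_train` are assumptions for illustration; the random data and tiny stand-in model only show that the calls run.

```python
import numpy as np
import tensorflow as tf

INIT_LR = 1e-2     # assumed initial learning rate
BATCH_SIZE = 4     # assumed; kept tiny so the sketch runs quickly
NUM_EPOCHS = 2     # assumed; a real CIFAR-10 run uses many more epochs


def compile_and_train(model, trainX, trainY, testX, testY):
    """Compile a Keras model with SGD + categorical cross-entropy and train it."""
    opt = tf.keras.optimizers.SGD(learning_rate=INIT_LR, momentum=0.9, nesterov=True)
    model.compile(loss="categorical_crossentropy", optimizer=opt, metrics=["accuracy"])
    # validation_data makes fit() report val_loss / val_accuracy every epoch
    return model.fit(trainX, trainY, validation_data=(testX, testY),
                     batch_size=BATCH_SIZE, epochs=NUM_EPOCHS, verbose=0)


# tiny stand-in model and random one-hot data, just to exercise the calls
demo = tf.keras.Sequential([tf.keras.Input(shape=(8,)),
                            tf.keras.layers.Dense(10, activation="softmax")])
X = np.random.rand(16, 8).astype("float32")
Y = np.eye(10, dtype="float32")[np.random.randint(0, 10, size=16)]
H = compile_and_train(demo, X[:12], Y[:12], X[12:], Y[12:])
```

The returned History object (H) holds the per-epoch metrics that the plotting step reads later.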

Next, we evaluate the network and plot the results:

Here we evaluate our model on the test data and generate a classification_report. Finally, we assemble the training history into a plot and save it to disk.
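A hedged sketch of the evaluation and plotting step, assuming scikit-learn and Matplotlib are installed. The helper names `evaluation_report` and `plot_history` are hypothetical; `history` is the dictionary stored on the object returned by `fit()` (`H.history`), assumed here to contain loss, val_loss, accuracy, and val_accuracy keys. The Agg backend matches the one the training script sets on line 3.

```python
import os
import tempfile

import matplotlib
matplotlib.use("Agg")  # non-interactive backend: figures go to files, no display needed
import matplotlib.pyplot as plt
import numpy as np
from sklearn.metrics import classification_report


def evaluation_report(testY, probs, label_names):
    """classification_report from one-hot ground truth and predicted probabilities."""
    return classification_report(
        testY.argmax(axis=1),
        probs.argmax(axis=1),
        labels=np.arange(len(label_names)),  # keep every class, even if never predicted
        target_names=label_names,
        zero_division=0,
    )


def plot_history(history, path):
    """Save training/validation loss and accuracy curves from a History.history dict."""
    epochs = np.arange(len(history["loss"]))
    plt.figure()
    for key in ("loss", "val_loss", "accuracy", "val_accuracy"):
        plt.plot(epochs, history[key], label=key)
    plt.title("Training Loss and Accuracy")
    plt.xlabel("Epoch #")
    plt.ylabel("Loss/Accuracy")
    plt.legend()
    plt.savefig(path)
    plt.close()


# usage with dummy two-epoch values, written to a temporary directory
demo_path = os.path.join(tempfile.mkdtemp(), "plot_demo.png")
plot_history({"loss": [1.0, 0.5], "val_loss": [1.1, 0.6],
              "accuracy": [0.3, 0.6], "val_accuracy": [0.25, 0.55]}, demo_path)
```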

Note that we would normally also serialize the model to disk at this point so that it can be used in image or video processing scripts, but that is beyond the scope of this tutorial.

To run the script yourself, make sure you download the source code for this article.

Then open a terminal and execute the following command to train the neural network with Keras:

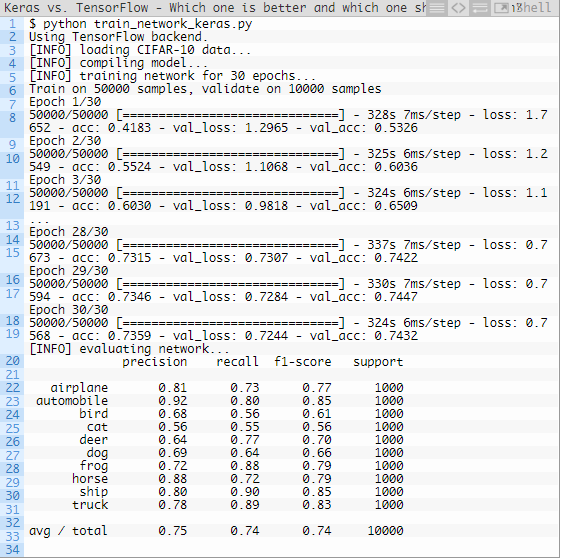

Each training epoch takes a little over 5 minutes on my CPU. The training results are as follows:

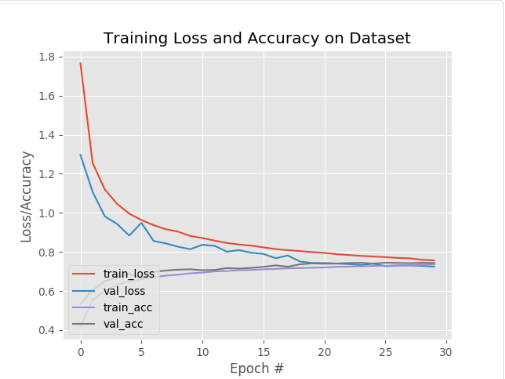

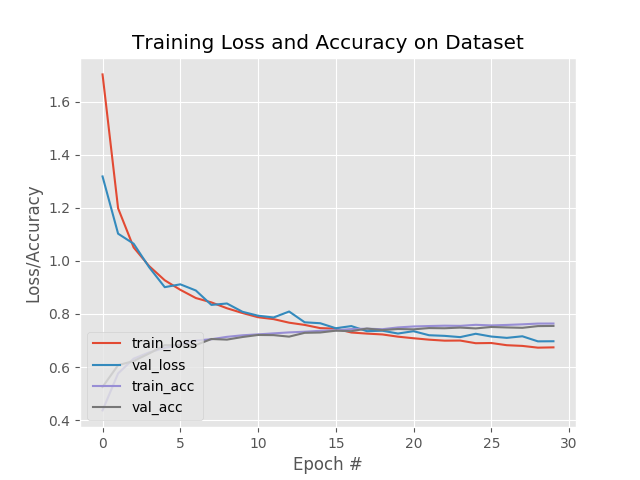

Accuracy/loss curves, plotted with Matplotlib, from training the network implemented in Keras

As the terminal output above shows, our model reaches 75% accuracy. This is not state-of-the-art, but it is far better than random guessing (1/10).

For such a small network, this result is actually very good!

In addition, as the plot in Figure 6 shows, our model does not overfit.

▌Train a neural network model with TensorFlow and tf.keras

The MiniVGGNet CNN architecture built with tf.keras (a module built into TensorFlow) is the same as the model we built directly with Keras, except that, for demonstration purposes, I changed the activation function.

Now that we have implemented and trained a simple CNN with the Keras library, our next steps are to:

1. Learn how to implement the same network architecture with the tf.keras module in TensorFlow;

2. Include in our Keras model a TensorFlow activation function that Keras does not implement.

Now, let's get started.

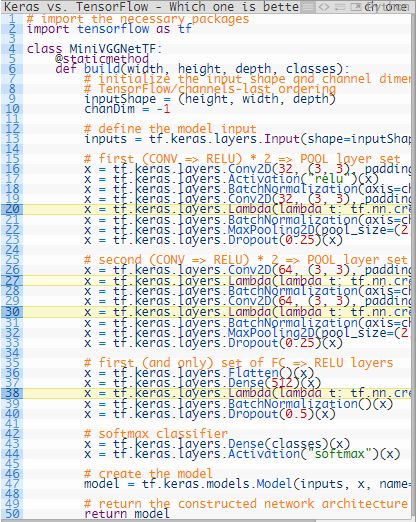

First, open the minivggnettf.py file, where we will implement the TensorFlow version of the MiniVGGNet model:

Note that on line 2 we import tensorflow. TensorFlow ships with the tf.keras submodule, which contains all of the Keras functionality we can call directly.

In the model definition, I use a Lambda layer (highlighted in yellow in the code) to insert a custom activation function, CRELU (Concatenated ReLUs).

CRELU was proposed by Shang et al. in the paper "Understanding and Improving Convolutional Neural Networks via Concatenated Rectified Linear Units".

Keras has no implementation of CRELU, but TensorFlow does (tf.nn.crelu). With the tf.keras module, a single line of code adds the CRELU function to our Keras model.
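A minimal sketch of that one-liner, assuming TensorFlow 2.x: wrapping `tf.nn.crelu` in `tf.keras.layers.Lambda` makes the TensorFlow op usable as an ordinary Keras layer. The surrounding Conv2D layer and input size are illustrative assumptions, not the exact MiniVGGNetTF code.

```python
import numpy as np
import tensorflow as tf

inputs = tf.keras.Input(shape=(32, 32, 3))
x = tf.keras.layers.Conv2D(32, (3, 3), padding="same")(inputs)
# the one line that brings TensorFlow's CReLU into the Keras model
x = tf.keras.layers.Lambda(lambda t: tf.nn.crelu(t))(x)
model = tf.keras.Model(inputs, x)

# CReLU concatenates two ReLUs, so 32 filters become 64 output channels
out = model.predict(np.zeros((1, 32, 32, 3), dtype="float32"), verbose=0)
```

Because the channel count doubles, any layer that follows a CReLU sees twice the depth it would see after a plain ReLU.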

Also note that CRELU has two outputs, a positive ReLU and a negative ReLU, which are concatenated together. For a positive value x, CRELU returns [x, 0]; for a negative value x, it returns [0, -x], so both halves are non-negative. As a result, the output has twice as many channels as the input. For details, please refer to the paper by Shang et al.
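To make the [x, 0] / [0, -x] behaviour concrete, here is a NumPy reference sketch of what CReLU computes, written to mirror the definition used by `tf.nn.crelu` (concatenate ReLU(x) and ReLU(-x) along an axis). It is meant for illustration and checking, not as a replacement for the TensorFlow op.

```python
import numpy as np


def crelu(x, axis=-1):
    """CReLU(x) = concat(ReLU(x), ReLU(-x)) along `axis` (NumPy reference sketch)."""
    return np.concatenate([np.maximum(x, 0), np.maximum(-x, 0)], axis=axis)


positive = crelu(np.array([2.0]))    # [x, 0]  for x = 2
negative = crelu(np.array([-3.0]))   # [0, -x] for x = -3
volume = crelu(np.zeros((1, 4, 4, 8)))  # channel axis doubles: 8 -> 16
```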

Next, we use TensorFlow + Keras to write the training script for the MiniVGGNetTF model. Open train_network_tf.py and insert the following code:

On lines 2-12 we import the packages needed for training. Compared with the Keras version of the training script, the only changes are that we import the MiniVGGNetTF class and import tensorflow as tf instead of Keras. Lines 15-18 parse our command line arguments.

As before, we load the training data on line 23. The rest of this block matches the Keras version of the training script: extracting the training and test data and encoding our labels.

Next, let's train our model:

Lines 39-54 match the Keras version of the training process except for the differences highlighted in yellow.

On lines 58-73, we evaluate our model on the test data and plot the final results.

As you can see, simply switching to tf.keras gives us an almost identical training process.

Then open a terminal and execute the following command to train the network with TensorFlow + tf.keras:

After training completes, you will get a training plot similar to the one above:

Accuracy/loss curves, plotted with Matplotlib, from training the network implemented with TensorFlow + tf.keras

Using CRELU in place of the original ReLU activation, we obtain 76% accuracy. However, the 1% gain may simply be due to the random initialization of the network weights; cross-validation experiments would be needed to establish whether CRELU actually improves the model's accuracy. In any case, raw accuracy is not the focus of this section.

What deserves more attention is how we replaced a standard Keras activation function with a TensorFlow activation function inside the Keras model!

You can do the same with your own custom activation functions, loss/cost functions, or layers.
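As a hedged sketch of the same idea for layers, assuming TensorFlow 2.x: subclassing `tf.keras.layers.Layer` packages TensorFlow code as a reusable Keras layer that can be configured and saved like a built-in one. The class name `CReLULayer` and the small model around it are hypothetical.

```python
import numpy as np
import tensorflow as tf


class CReLULayer(tf.keras.layers.Layer):
    """Hypothetical custom layer wrapping TensorFlow's CReLU op."""

    def __init__(self, axis=-1, **kwargs):
        super().__init__(**kwargs)
        self.axis = axis  # axis along which ReLU(x) and ReLU(-x) are concatenated

    def call(self, inputs):
        return tf.nn.crelu(inputs, axis=self.axis)

    def get_config(self):
        # include `axis` so the layer can be re-created from a saved config
        config = super().get_config()
        config.update({"axis": self.axis})
        return config


# used like any built-in layer inside a Keras model
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8),
    CReLULayer(),
    tf.keras.layers.Dense(3, activation="softmax"),
])
preds = model.predict(np.zeros((2, 4), dtype="float32"), verbose=0)
```

Compared with a Lambda layer, a subclass keeps its parameters in `get_config()`, which makes the model easier to serialize and reload.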

▌Summary

In this article, we discussed the following questions about Keras and TensorFlow:

Should I use Keras or TensorFlow in my project?

Is TensorFlow better than Keras?

Should I spend time learning TensorFlow or Keras?

In the end, we found that choosing between Keras and TensorFlow is an increasingly irrelevant question. The Keras library has been integrated directly into TensorFlow through the tf.keras module.

Essentially, you can write your model and training process with the easy-to-use Keras API, then drop down into pure TensorFlow for custom implementations.

So if you are about to start learning deep learning, are torn between "Keras or TensorFlow?" for your next project, or are simply wondering "Which is better?", now is the time to stop searching for answers and motivation. My advice to you is simple:

Just get started!

Type import keras or import tensorflow as tf (so you have access to tf.keras) in your Python project and get to work.

TensorFlow can be integrated directly into your model and training process, so you can use TensorFlow or Keras in your project without comparing features, functionality, or ease of use.

▌Reader's question

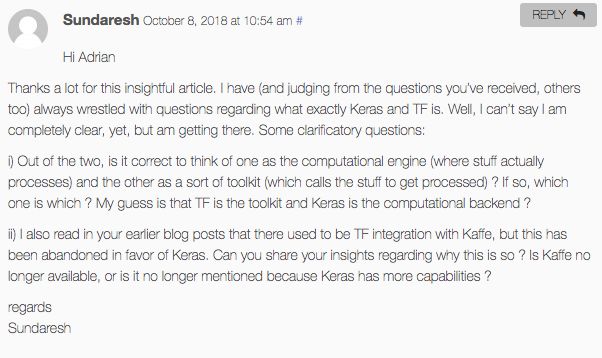

On this topic, one reader raised some pointed questions:

Based on the above and my own understanding, many developers are still confused about what Keras and TensorFlow each are. Maybe I don't know much about this, but I would still like to ask some clarifying questions:

First, is it correct to regard one of the two as a computing engine and the other as a toolkit? If so, I guess TensorFlow is the computing engine and Keras is the toolkit?

Second, you also mentioned integrating TensorFlow and Caffe, but you seem to have abandoned Caffe in favor of Keras. Can you share why? Is Caffe no longer usable, or does Keras simply have more features?

Adrian Rosebrock replied:

Yes, Keras itself relies on backends such as TensorFlow, Theano, CNTK, etc. to perform the actual computation.

Caffe still exists, but much of its functionality has been broken out into Caffe2. TensorFlow has never been part of Caffe. Caffe is still used, especially by researchers. But practitioners, especially Python practitioners, prefer more programmer-friendly libraries such as TensorFlow, Keras, PyTorch, or mxnet.
